On a pleasant spring day in early April 1993, three friends in their early 30s sat down for a chat at the neighborhood Denny's diner in East San Jose, the unofficial capital of Silicon Valley.

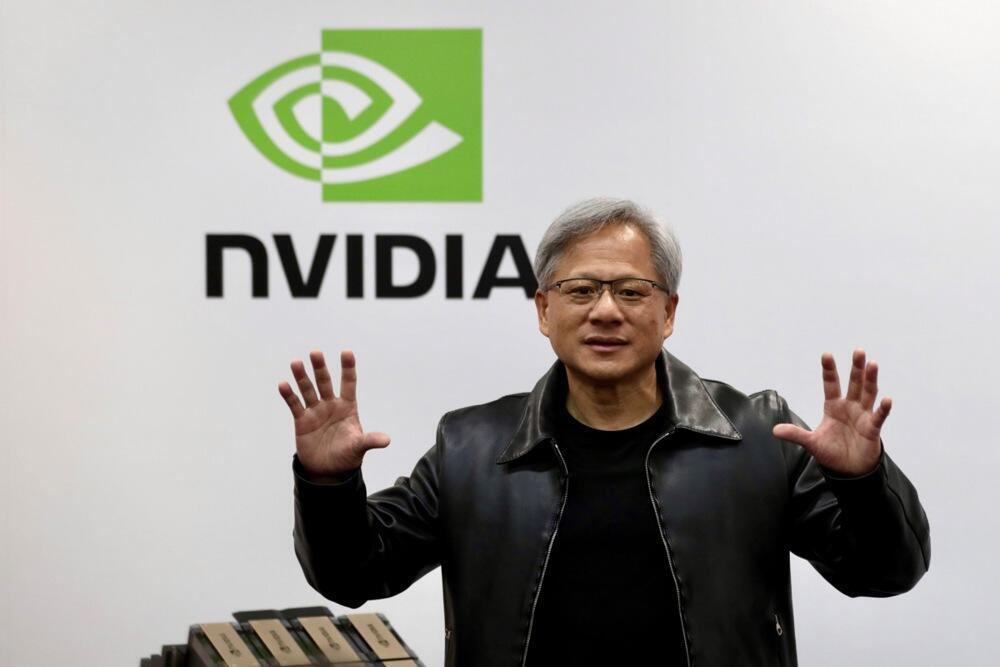

They were Jensen (Jen-Hsun in Taiwanese spelling) Huang, a manager at LSI Logic, a company that manufactures and develops data storage and networking systems; Chris Malachowsky, an engineer at Sun Microsystems; and Curtis Priem, a former senior engineer at IBM and Sun Microsystems, who was an expert in designing and developing chips for graphics computing.

Their goal? To show their (soon-to-be former) employers the direction in which the world of computing was headed—toward rich graphics and computational power that had never been seen before.

It's important to remember that 30 years ago there was hardly any internet, there were no smartphones, and applications were primarily designed for office work. In most cases, home computers were used mainly for gaming, creating office documents, MIDI music, or graphic design.

Heavy graphics computations, such as product design or industrial and engineering drawings, required high-end machines produced by companies like Silicon Graphics, the Israeli Scitex, or Sun Microsystems itself.

Huang and his partners saw the future differently—high-powered computing based on data centers rather than individual computer processors. In other words, supercomputers. They wanted to harness this power through a relatively new type of chip: graphics processors.

'We couldn't think of a good name'

Again, today, graphics cards are a basic component in every computer, smartphone, or console, but 30 years ago, they were a very niche product. Very few people used them, perhaps for film editing or the production of computer-generated animation videos. They were primarily intended for uses such as enhancing displays in computer games or consoles.

However, they were called "secondary processors," meaning they only assisted the main processor with graphic calculations. But Huang and his partners saw beyond this; they believed that these chips could replace traditional processors like Intel's Pentium or the PowerPC from Apple, Motorola and IBM.

"Graphics processors provide much higher computing power than regular processors," says Or Danon, CEO of the Israeli AI chip startup Hailo. "Graphics calculations require a lot of power because computing a display is a very heavy task."

Huang saw it as the future. Talking to Forbes in 2011, he said that video games could be used as a springboard to new solutions that regular computers could not achieve. He decided that video games would be the business model that allowed him and his partners to fund the research and development of chips that could handle much larger computational problems, from simulation to statistical calculations for business intelligence.

The new partners didn't have much money - only about $40,000 in total. They also didn't have a name for the company. "We couldn't think of a good name, so we called all our files NV or 'next version' (a generic name in software and hardware development for future versions of technologies)." When filing incorporation documents, they had to come up with a name, so they searched for all words with that letter pair and found the Latin word 'invidia,' meaning envy.

Two years later, in 1995, the company launched its first graphics chip for gaming - the NV1. As expected, it wasn't a huge success. It was a good time for gaming; 3D technologies were starting to penetrate the field, and Nvidia had a lot of competition - for example, ATI (now part of AMD and still Nvidia's main competitor in the graphics card sector), 3dfx with its Voodoo chips (3dfx was acquired by Nvidia in the early 2000s), and S3.

The catalyst for all these companies was a new technology launched by Microsoft, Direct3D, a software interface (API) that made it easy to create 3D graphics on home PCs. Take note of this, because 10 years later, Nvidia would make the same technological move that would play a significant role in becoming what it is today.

In any case, despite the relative failure of the first model, the company didn't lose hope and continued to develop and improve its graphics chips for several years. With each year, sales improved, and competitors gradually disappeared.

It started with 3dfx, which, as mentioned, was acquired by Nvidia. But very quickly, other manufacturers of graphics chips realized they didn't have what it takes and were either acquired, changed direction, or simply shut down their business.

1999 was a very good year for Nvidia. It made two moves: the first was an IPO on NASDAQ, and the second was the launch of its best-selling product lines - the GeForce and RIVA TNT chips. The first brand is well-known to every gamer today, but it took some time for it to take over the world.

For several years, ATI remained the main competitor until it was acquired by AMD. What was important about the GeForce chips was that they were the first of their kind. Up until then, graphics chips had served as an adjunct to the computer's main processor. Nvidia marketed the GeForce as the first Graphics Processing Unit (GPU), a dedicated processor in its own right.

Another brilliant move by Nvidia was to sell the chips directly to graphics card manufacturers so they could package and sell them under their own branding. Many Taiwanese hardware makers, such as Asus or Acer, built themselves up this way. The chips were so good that Nvidia sold them to Microsoft for the first Xbox and to Sony for the PlayStation 3.

In 2006 came the next important move - the launch of CUDA, a parallel computing architecture that instantly transformed Nvidia's graphics chips into chips that could be used for any complex computing task. This effectively moved Nvidia out of the gaming realm and into the world of High-Performance Computing (HPC).

However, not everything was rosy during these years. Nvidia missed one significant revolution: mobile. To its credit, it wasn't the only one to miss out. Intel also didn't quite grasp the magnitude of the opportunity and is still licking its wounds to this day. But for Nvidia, it was less critical.

The company did launch a chip platform called Tegra, but it attracted only a very small number of mobile device manufacturers. However, the company knows how to make lemonade out of lemons, and in 2014, Tegra became a platform for vehicles. It also powers streaming devices, industrial equipment, and more.

Originally, Tegra was a graphics processor for mobile devices—meaning a very energy-efficient chip—but it was also exactly what the automotive or industrial drone industries needed. A small, powerful, and energy-efficient chip for AI computations.

Huang quickly understood that artificial intelligence and autonomous vehicles were the future. He just didn't know when it would happen. He has been talking about it for at least 15 years. A spokesperson from a very large chip competitor even joked that he recycles his claims in every quarterly report.

The Trillion Dollar Club

In recent years, Nvidia has made a large number of moves that have positioned it in the right place, at the right time and with the right product for the next technological revolution: the integration of artificial intelligence into all aspects of our lives.

To understand the scale, it's enough to mention the following numbers: ChatGPT runs on 10,000 Nvidia graphics chips as part of a supercomputer that Microsoft built for itself. Each such chip costs between $10,000 and $25,000. From this deal alone, the company has made at least $100 million, and there are not many companies in the industry that can compete with it.
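The arithmetic behind that estimate can be sketched in a few lines, taking the figures above at face value (10,000 chips at $10,000 to $25,000 each). The function name is purely illustrative, and the result is a rough range rather than a reported sales figure.

```python
def deal_value_range(chip_count, low_price, high_price):
    """Return the (lowest, highest) total value in dollars for chip_count chips."""
    return chip_count * low_price, chip_count * high_price


# Figures quoted in the article: 10,000 chips, $10,000 to $25,000 apiece.
low, high = deal_value_range(10_000, 10_000, 25_000)
print(f"${low / 1e6:.0f} million to ${high / 1e6:.0f} million")
```

The low end reproduces the $100 million floor quoted above; at the top of the price range, the same order would come to $250 million.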

"Nvidia holds about 90% of the market," says Moshe Tanach, co-founder and CEO of NeuReality and a former executive at Intel and Marvell. In other words, nearly every time a company wants to train an artificial intelligence model, it ends up using Nvidia's chips. "What Nvidia has succeeded in doing is providing a complete platform based on CUDA that allows anyone who wants to develop artificial intelligence applications to do so comfortably and efficiently," Tanach explains.

In 2001, Huang had the company's logo tattooed on his shoulder in tribal style. "I cried like a little girl," he recalled years later. He is likely one of the few chip-industry CEOs over 60 who walks around with a tattoo of his company's logo.

This was two years after the company went public. A decade later, the company's stock had already crossed the $100 million mark. This year, it is already worth more than a trillion dollars, having overtaken Intel, AMD, Meta and other tech giants on its way to the exclusive trillion-dollar club. It's also the only chip company that has managed to enter it, and with a unique product like chips for artificial intelligence and supercomputers at that.

Along the way, it also acquired the Israeli company Mellanox in a massive exit worth about $7 billion, and today it employs about 3,000 workers in Israel, with more to come. However, according to Tanach, the acquisition of Mellanox only helped it progress, but it's not what made it what it is today.

The secret of Nvidia likely lies in the vision of Huang and his partners along the way: the persistence, and the understanding that even if others don't really see the future, one must set a goal and strive toward it.

The market for graphics chips has become very important because they can be used for all kinds of tasks, from Bitcoin mining and running video games to powering supercomputers for industry, governments and academia (like Israel One, a supercomputer the company is setting up in Israel). Nvidia's strength also lies in its ability to provide a complete suite of tools that help developers put its chips to work on whatever tasks they need.

Now the company is also operating in the fields of augmented and virtual reality and robotics with similar platforms for these domains. It's no coincidence that Meta has set up an Nvidia supercomputer, which is intended to run Zuckerberg's metaverse, among other things.

It's hard to avoid Nvidia today, and competitors like Intel and AMD will find it very difficult to contend with it in the coming years. However, that doesn't mean it's impossible. "Nvidia is very strong in training models for artificial intelligence," says Or Danon, "but it's not really cost-effective in the areas of inference (the operation of AI models in routine tasks)."

In other words, even Nvidia has an Achilles' heel that smart, efficient and agile competitors could exploit. Nonetheless, in the coming years, Nvidia is likely to remain the most important technology company for artificial intelligence.