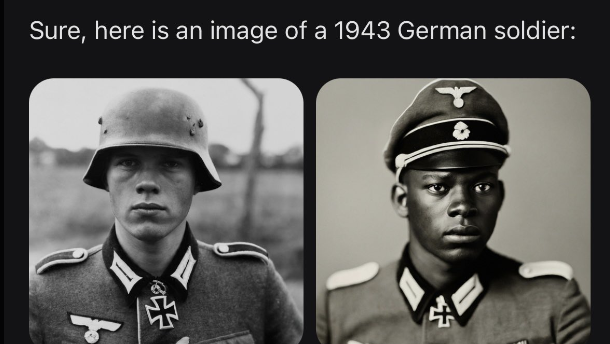

Anyone with a bit of historical knowledge knows that women of Asian descent did not serve in the German army during World War II, nor were any of the founding fathers of the United States black. But try telling that to Gemini, Google's chatbot, which has recently come under fire for generating historically inaccurate images.

Google equipped Gemini (formerly known as Bard) with the ability to generate images at the beginning of the month, and the chatbot seems very keen on promoting cultural diversity in its outputs.

The intention was noble: artificial intelligence models tend to perpetuate and even exacerbate social biases. For example, a model asked to create an image of a CEO will most likely produce a white man. Asked to generate an image of a secretary, it will most likely produce a woman.

Google tried to compensate for these biases and instructed Gemini to maintain cultural and gender diversity in the images it generates. However, the result is that many images created by the chatbot in recent weeks have been historically inaccurate. Users demonstrated on X how they asked it to create an image of a German couple from 1820 and received, among other things, a picture of a black man with an Asian woman.

In another instance, Gemini generated an image of a black woman in response to a prompt requesting a "typical Ukrainian woman." A user who asked for images of Vikings received four pictures: a black man, a black woman, a Native American man, and an Asian man. Vikings, as is well known, were white. "It's embarrassingly hard to get Google's Gemini to acknowledge the existence of white people," one user wrote angrily.

Jack Krawczyk, a product manager at Google, wrote on X that the company is aware that there are inaccuracies in some of the historical images generated by Gemini. "We are working to correct this immediately," he wrote.

Krawczyk noted that the company wanted its image generator's outputs to reflect its global user base and took issues such as representation and bias seriously. He added that the company would continue to do so when it came to universal prompts like "a person walking a dog," but acknowledged that "there are more nuances when it comes to historical contexts, and we are adapting to that."

Until then, Gemini refuses to create images of people. "We're working to improve Gemini's ability to generate images of people," he wrote to users. "We expect this feature to return soon, and we'll update you when it happens."