The now-famous ChatGPT chatbot stormed onto the internet in November 2022, making AI models and advanced language processing tools available in every home.


It then quickly became the fastest-growing consumer application in history, with over 100 million monthly active users. It pushed investment and competition in generative AI to new heights and drove changes across many industries and professions worldwide.


ChatGPT is the fastest-growing consumer application in history.

(Photo: trickyaamir, Shutterstock)

One of the biggest appeals of AI to consumers is the "mystery" of how it works in the first place. However, as public and economic interest in the technology continues to grow, regulators are becoming increasingly involved. For them, the mystery and vagueness surrounding this technology conflict with their desire for clear answers and strong regulation.

As the conversation around these applications expands from their benefits to the dangers and harm they could cause, regulators and lawmakers around the world are paying closer attention.

Will technology giants soon be forced to restrict or slow down developments in the field? These are some of the steps being taken today around the world.

Europe one step ahead in regulating AI

When it comes to technology regulation, Europe appears to be one step ahead. In late March, the Italian Data Protection Authority, or Garante, ordered OpenAI to temporarily stop processing Italian users' data, effectively blocking ChatGPT in the country.

The step was a precautionary measure while the authority examined whether the popular application violates European privacy and data protection laws.

ChatGPT's underlying AI model is trained mainly on information collected from the internet. In Europe, however, using information about individuals may be considered a violation even if that information is publicly available.

Meanwhile, ChatGPT has resumed operating in Italy, with the company announcing several changes. Among other things, the company said it will adopt a more transparent policy and provide a form that EU users can use to object to the use of their personal data to train AI models.

In addition, users registering for the service are now asked to confirm that they are over 18, or over 13 with parental consent. Age was another concern raised by the regulator.

The company told Italian authorities that it is currently unable to fix an issue in which the application makes up inaccurate information about individuals. It will therefore allow individuals to have any data they consider inaccurate erased.

The service has resumed operation under the initial conditions set in Italy, but according to the authority, its investigation into the application continues.

Italy also expects OpenAI to meet additional requirements, such as integrating an age verification system and launching a local information campaign that explains how the application processes Italian citizens' data and shows them how to opt out of that processing.


OpenAI will have to meet additional requirements to continue operating in Italy.

(Photo: kovop / Shutterstock.com)

Italy's actions raised questions about whether the AI model may use personal data without consent, including information users provide to the application during conversations.

It's not just Italy. Authorities in Spain, France, Germany and Ireland are also looking into how AI chatbots such as ChatGPT collect and use data.

Broader actions are also planned in Europe. The European Data Protection Board, which brings together Europe's data privacy authorities, has established a task force on ChatGPT to coordinate investigations and enforcement around AI chatbots. This could lead to a common European policy on such apps.

Europe may also take additional steps, including fines and orders requiring the company to delete data. Other Western countries are expected to follow the European Union's lead on the matter.

Privacy is not the only challenge

The challenges European regulation poses for OpenAI and other companies are not limited to privacy. The EU is currently drafting the first comprehensive set of AI laws in the West, which is expected to pass in late 2023.

According to reports, AI tool developers will be required to specify which copyrighted materials are being used to build their systems, allowing the original creators to demand monetary compensation for their use.

The EU will also require developers to build their models with safeguards that prevent them from generating illegal content. This marks a shift in legislative approach: until recently, the law focused on malicious uses of AI rather than on the models themselves.

The US moves against AI

Technology regulation tends to be much more lax in the United States, and the country also has a significant interest in competing with China in the field of AI. Nevertheless, the U.S. has begun taking several steps to rein in the new technology.

This week, the CEOs of OpenAI, Google, Microsoft and the start-up Anthropic met with U.S. Vice President Kamala Harris and other senior officials to discuss AI safety measures. Harris sought to convey that the companies have a responsibility to prevent potential harm from their products.


U.S. Vice President Kamala Harris met with the CEOs of OpenAI, Google, Microsoft and Anthropic

(Photo: AP)

On the same day, the White House announced a $140 million investment in developing new AI research institutes, and said it expects companies to agree to reviews of their products at the DEF CON cybersecurity conference to be held in August.

Other senior U.S. officials, such as Federal Trade Commission Chair Lina Khan, have also spoken out about AI regulation. Last month, the Commerce Department signaled that it is considering requiring AI models to undergo a review process before they are put into use.

Chuck Schumer, the U.S. Senate Majority Leader, also recently announced an effort to pass legislation on AI models addressing concerns in national security and education.

According to Schumer, the regulation's purpose is to "prevent potentially catastrophic damage to our country while simultaneously making sure the U.S. advances and leads in this transformative technology." He also called for bipartisan collaboration to advance the planned legislation.

Under Schumer's proposal, companies would be required to allow independent technology experts to examine AI applications before releasing or updating them, and to give users access to the resulting reports and findings.

Another threat to generative AI tools in the U.S. comes from an unexpected direction. The U.S. Supreme Court is currently examining whether Section 230 of the Communications Decency Act, which shields platforms from liability for content posted by their users, also protects recommendation algorithms.

The decision, expected next month, will determine whether recommendation algorithms are themselves considered content creators.

Should the court rule that the law does not protect these algorithms, the ruling may have implications for generative AI models, whose developers could be exposed to lawsuits over defamation and privacy violations.

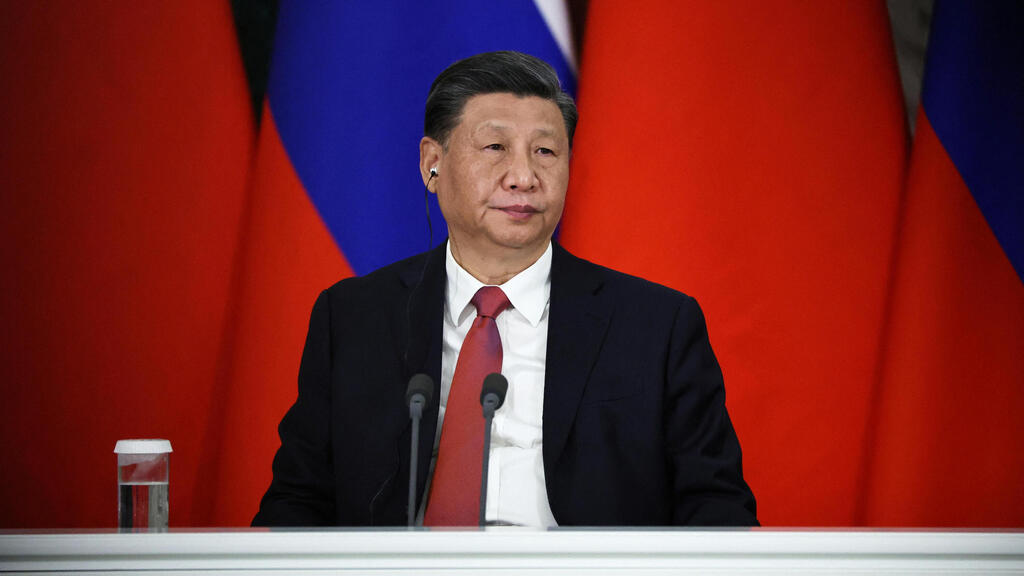

China already regulates AI products

China has already drafted laws to regulate the development of generative AI products by leading companies. The motivation for regulation in China is entirely different, however.

Among other things, AI developed in the country must reflect socialist values in the content it produces, according to a draft outlining the legislation's guiding principles released by China's cyberspace regulator.

This reflects China's censorship practices, which aim to preserve and promote the government's ideology.

The laws state that content produced by generative AI must not undermine state power, seek to overthrow the socialist system, or promote terrorism, extremism, ethnic hatred, fake news or content that may "disrupt social and economic order."

According to the Chinese rules, the data used to train the models must not violate people's privacy. The legislation also allows for fines or suspension of services for repeat offenders. According to Bloomberg, China plans to conduct security tests on generative AI services before allowing them to launch widely in the country.

The draft rules were released after Chinese companies such as Alibaba and Baidu unveiled their alternatives to ChatGPT, but before those products were released to users in the country.

As in the U.S., China has an interest in balancing innovation with regulation. The country is therefore keen to promote the development of local AI alternatives and is also likely to restrict Chinese users' access to foreign services.

Early examples of censored generative AI emerged with Baidu's image generator, ERNIE-ViLG, which refused to create images of Tiananmen Square and political leaders, flagging such content as "sensitive."

Other countries call for AI regulation

Aside from these moves, there have been additional calls for regulation. Ahead of the G7 summit, Japanese Prime Minister Fumio Kishida said that "international rules need to be created," and ministers from G7 countries have called for the adoption of AI regulation.

Another call came from Nicolai Tangen, head of Norway's sovereign wealth fund, who said governments should speed up AI regulation. The fund, valued at $1.4 trillion, is set to publish guiding principles in August on the ethical use of AI by the companies it invests in.

The fund invests in around 9,000 companies, including technology giants like Apple, Alphabet, Microsoft and Nvidia.

Back in March, an open letter pushing for AI regulation was released, signed by Elon Musk and a line-up of technology leaders and other public figures, including Apple co-founder Steve Wozniak, IBM chief scientist Grady Booch and Israeli historian Yuval Noah Harari.

The concerns raised in the letter went far beyond privacy infringement, copyright issues or national values. The letter called for a six-month pause on training AI systems more powerful than GPT-4.

"Recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict or reliably control," the letter stated, adding that, should companies not voluntarily stop their work, "governments should step in."

The letter warned of possible apocalyptic scenarios.

"Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization? Such decisions must not be delegated to unelected tech leaders."