"The machines will do all the work, leaving mankind with nothing meaningful to do. We will become passive and dependent, losing our purpose and sense of fulfillment," Bertrand Russell wrote in 1935.


Russell was one of the most important and influential philosophers of the 20th century, but he, like many before and after him, harbored an exaggerated fear of technology, and especially of what it could do to humanity.

Of course, there is some truth in his words, as well as those of others, whose bleak forecasts can be found even in the pages of this newspaper: every technological leap has positive and negative effects, but it is clear that for the vast majority, advanced technology brings with it many more positive developments than negative ones, and the latter can be reduced and contained.

Unless you've been living under a rock, you have probably been exposed to the wave of advanced technology sweeping society in recent months: the "AI" wave. These learning algorithms, which are generally referred to as "artificial intelligence", are primarily based on large language models that are capable of conducting a human-like conversation on a variety of complex topics, purposefully summarizing many sources of information, and even producing content (such as songs, rhymes, scripts, or software code) based on an external prompt.

But simultaneously with the growing popularity of these impressive tools across the general population, it is difficult to ignore the flood of opinions, statements, articles, letters and petitions warning of a new existential threat.

Just as the fear of machines during the industrial revolution and the fear of computers in the 20th century were proven wrong, so too will the fear of what we today call "artificial intelligence". I will explain why.

The concerns can be divided into three main groups: the first - and the one that attracts the most headlines - is the fear that AI will "take over humanity". The fear, as we have read in books and seen in movies, is that machines become sophisticated to the point of developing a non-human consciousness motivated to control resources and end up becoming more sophisticated than humans, replacing them and subduing them.

The second is the fear that AI will eliminate many jobs and cause unprecedented unemployment - especially among white-collar professions (lawyers, accountants, and even doctors). The third is the fear that AI will bring about a fundamental change for the worse in the manner, nature and authenticity of public discourse.

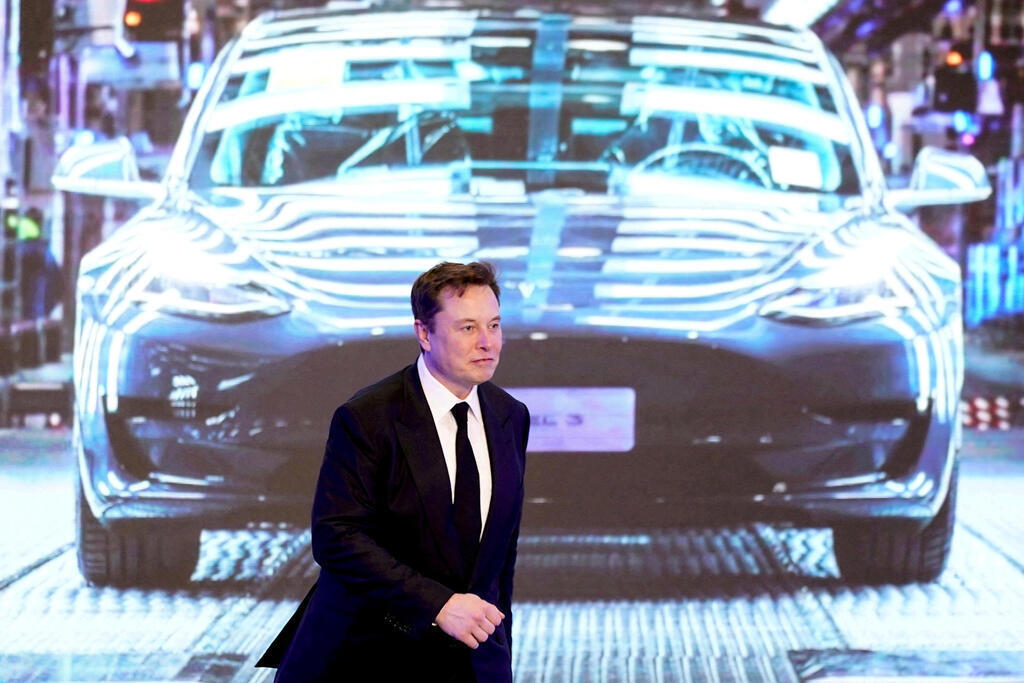

As for the first, main concern - which was expressed by Elon Musk, Steve Wozniak, Yuval Noah Harari and others in the petition calling to pause AI development for six months – we can and should relax.

In short, there is no reason to fear AI if there really is no AI. The truth is that calling something "AI" time and again does not make it real AI. Humans tend to attribute qualities or characteristics to objects, in a kind of reflexive personification. This is a known flaw that stems from our amazing ability to automatically form a theory of mind - that is, to put ourselves in another's shoes and feel what they feel, even when the other is an object.

When a person sees a drawing of a triangle running away from a square on the screen, he instinctively thinks that the square scares the triangle and that the triangle is indeed afraid of it.

This is what is happening now with language models like ChatGPT that have taken over the world: while it is a completely soulless system, we tend to associate it with abilities or intentions that it does not possess - because it mimics human behavior in an astonishing way.

In fact, on a philosophical level, ChatGPT is more like a giant calculator, or a massive dictionary, than an intelligent creature. It lacks awareness - and therefore also intelligence. The scientific community agrees on this.

Algorithms do not have the ability to control their actions, they do not have the ability to influence how they learn, they are not able to assign any value (positive or negative) to a particular situation or interaction, they cannot invent something "new", and they do not have the ability to respond to things they have not been exposed to (or a variation of them) before. These abilities are the cornerstone of cognition and awareness.

At the end of the day, these are very sophisticated and remarkable methods to produce sentences that make statistical sense, and nothing more. In many ways, a bee has more intelligence than ChatGPT - and certainly more desires, goals and cognitive abilities.

One of the most repeated (and incorrect) claims is that artificial intelligence has "achieved mastery of language". This claim indicates a complete lack of understanding of what language is, what human fluency in language is, and in fact what intelligence is. The systems we call AI do not really have cognition, goals, or language abilities. There is a huge difference between how humans understand language and how these machines are built.

The best way to think about these algorithms is as a huge dictionary, with a huge number of indexes, that knows how to find a sentence that has been said or written in the past (or a sentence that is statistically similar to one) - and pair it with an appropriate answer, on a statistical basis as well.
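The dictionary analogy above can be sketched in a few lines of Python. This is a toy illustration only, not how ChatGPT or any real language model is implemented: the stored sentences, the `SEEN` table and the word-overlap similarity score are all assumptions made up for the example.

```python
# Toy illustration of the "huge dictionary" analogy; not a real language model.

def tokenize(sentence: str) -> set:
    """Lowercase the sentence, drop basic punctuation, and return its set of words."""
    return set(sentence.lower().replace("?", "").replace(".", "").split())

def similarity(a: str, b: str) -> float:
    """Jaccard overlap between the word sets of two sentences (0.0 to 1.0)."""
    words_a, words_b = tokenize(a), tokenize(b)
    if not words_a or not words_b:
        return 0.0
    return len(words_a & words_b) / len(words_a | words_b)

# Hypothetical "index" of previously seen sentences and their paired answers.
SEEN = {
    "What is the capital of France?": "The capital of France is Paris.",
    "How do I boil an egg?": "Place the egg in boiling water for about eight minutes.",
    "Who wrote Hamlet?": "Hamlet was written by William Shakespeare.",
}

def answer(prompt: str) -> str:
    """Return the answer paired with the stored sentence most similar to the prompt."""
    best_match = max(SEEN, key=lambda seen: similarity(prompt, seen))
    return SEEN[best_match]

print(answer("what's the capital city of France"))
```

The prompt never appears in the table word for word, yet the lookup still returns the Paris answer, because that stored sentence shares the most words with it. Nothing in the code knows what a capital or a country is.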

The best way to illustrate this is the Chinese Room, a thought experiment proposed by the philosopher John Searle in 1980. The thought experiment imagined the existence of a ChatGPT-type algorithm and assumed that it would be able to respond to any input in Chinese with a "logical" output, also in Chinese.

In the next stage, Searle claims, he enters the room with an English operating manual of the algorithm, receives input in Chinese, follows it according to the instructions in the English manual, and produces a Chinese output which he takes out of the room.

On the one hand, to those outside the room, it will appear as if Searle is implementing the algorithm and producing an output considered "intelligence", but on the other hand, it is clear that Searle does not know a word of Chinese, and he is simply following the instructions.

This argument is intended to show that the mere ability to produce an answer does not imply a true understanding of its content, as we intelligent people understand it.
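For readers who think in code, the room can be caricatured as a lookup table. The sketch below is an assumption-laden toy: the `RULE_BOOK` entries, the `FALLBACK` reply and the `operator` function are invented for illustration and are not part of Searle's original description.

```python
# Toy caricature of the Chinese Room: the operator only matches and copies symbols.

# Hypothetical rule book. The English glosses in the comments are for the reader;
# the code never uses them, just as Searle never needs them inside the room.
RULE_BOOK = {
    "你好吗？": "我很好，谢谢。",        # "How are you?" -> "I am fine, thank you."
    "你叫什么名字？": "我没有名字。",    # "What is your name?" -> "I have no name."
}

# Reply handed out when the input is not listed ("Please say that again.").
FALLBACK = "请再说一遍。"

def operator(symbols: str) -> str:
    """Follow the manual: find the input in the rule book and copy out its output."""
    return RULE_BOOK.get(symbols, FALLBACK)

print(operator("你好吗？"))
```

To someone outside the room the reply looks fluent, yet the function only compares strings for equality. No step in it depends on what the symbols mean.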

The fear of revolutionary technology is a recurring element of human thought. We live in a web of cause and effect, observation and explanation. As soon as people are exposed to a phenomenon that does not fit with known causality, or with a plausible explanation, our initial reaction is fear. We tend to fear what we cannot explain.

One can only imagine how our ancestors reacted when they were first exposed to a magnifying glass, or when the inhabitants of a remote village were first exposed to a motorized car. In fact, one documented example is the reaction of indigenous tribes in the Amazon to a helicopter flying in the sky, which they took to be a god.

Those of us who have been working with machine learning models for years know that these are elegant algorithms with amazing abilities to identify patterns and correlations and to analyze text; some can even pursue a specific goal and play chess or Go (sometimes better than humans). But this is not conceptually different from other cases where technology surpasses our abilities: Waze navigates better than humans do, and a calculator does arithmetic better than we can.

This is the essence of technology. It is indeed exciting when a machine beats a person in a board game or writes a script for an episode of Seinfeld, but this does not mean that these models have souls and desires, or that we can attribute intentions and wishes to them.

It is important to note that while the scientific community was indeed surprised by the capabilities of the new generation of language models, these models do not represent a significant scientific breakthrough. The algorithms underlying them have existed for many years (in some cases for decades).

So, what has changed lately? These models have indeed managed to grow exponentially in their level of engineering complexity (for example, the number of parameters) - which gave rise to the ability to process much more data and respond to instructions with precision.

At the same time, the fear that language models will want to take over the world is similar to the fear that a merry-go-round horse in an amusement park is trying to catch up with the horse in front of it. This is not to say that algorithms without intelligence or soul cannot cause damage. Of course they can.

It is enough to imagine that we will allow these algorithms to control military decision-making to understand that the world could deteriorate into unnecessary wars due to the 'logical justice' of a machine. The responsibility for controlling and limiting these algorithms' decision-making domain lies with the international community, with governments around the world, and in fact with society as a whole.

As for the second concern: historically, any significant technological improvement has produced a radical change in the way certain jobs are performed. Think of the farmer when the plow was invented, or the tailor when the sewing machine was invented.

Throughout history, the effect of new technology on GDP has been positive (and in fact, only through technological progress has it been possible for GDP to grow significantly). The results for society and the economy were more jobs and more productivity, and not the other way around - and that will also be the case this time.

What will change of course is the way of working: it is true that lawyers will have to learn to work with ChatGPT in order to, for example, review and amend contracts more efficiently and quickly, and so on.

The third concern surrounding language models is the fear of a significant change in the way information is produced, organized, distributed and consumed in society; fake news on AI steroids. This is a legitimate and important concern.

Humans who use these algorithms may use them for malicious purposes, by impersonating other humans, spreading false or misleading information, engaging in emotional manipulation, and more. That is, just like what happened with social networks and the internet giants (which have been using similar models for a very long time), only on a more extensive and significant scale.

They say guns don't kill people, people kill people. This is also true in this case. There are many ways to deal with this concern, and they should probably be pursued simultaneously, on the technical level as well as on the social, cognitive and regulatory levels. This is precisely why there are regulatory bodies and experts in technology, media and society.

For example, deliberate disinformation should be a very serious offense, especially if it is produced by politicians, public figures or media organizations.

As long as these models continue to grow, this will be an important time for apolitical and non-state regulatory bodies to take on a very significant role in researching the truth, establishing reliable sources, and listening to public criticism.

Furthermore, the various sources of authority (scientists, academics, journalists, each in his own field) will have to be a more significant and critical layer than ever before, in the way human society consumes information and conducts itself.

Dr. Zohar Bronfman Photo: Ohad Mata

Finally, technology can also serve society in this instance. It is possible to build systems that monitor the activity of models and warn against deviations from accepted norms or from their desired action.

Like any breakthrough technology, GPT and other language models create a new reality, and as such require broad attention and reflection from us as a society. But these models are far from imitating or replacing the human spark, the spark that invented these models and many other inventions that changed our lives. The responsibility to preserve this unique spark is on us - and the solution to this is not to halt development, stop research or ignore the existence of advanced language models. It's time to stop spreading bleak forecasts.

- Dr. Zohar Bronfman is a leading expert in artificial intelligence and CEO and co-founder of Pecan.ai.