Do you also consult with “the chat” about matters of a broken heart or a crisis at work? The problem of addiction to personal and psychological counseling through ChatGPT is worsening. People, mainly young people under the age of 30, tend to rely on the chat to solve their life difficulties. This sometimes ends with unprofessional, dangerous advice and even with support for suicidal thoughts.

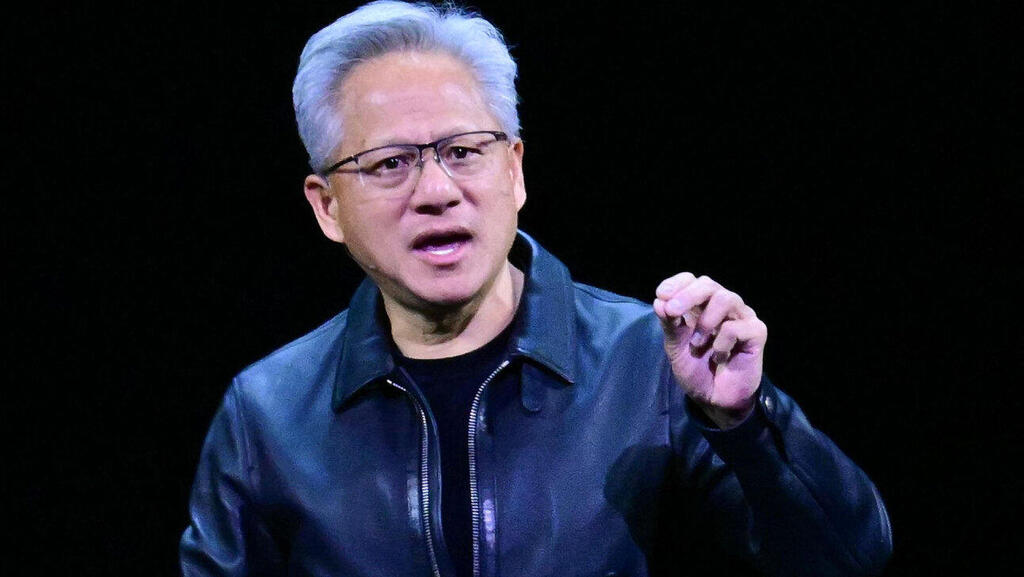

This phenomenon definitely worries OpenAI, the company that develops and operates ChatGPT. A few weeks ago, Sam Altman, the company’s CEO, held a meeting on this topic with a small number of startup CEOs who are collaborating with him. One of them was Dan Lahav, CEO of the Israeli company Irregular. “What came up there,” he says, “is that people over the age of 30 treat the chat as a substitute for Google, but teenagers largely see it as an operating system for life.”

This is the point at which the technology being developed at the small company Irregular in Tel Aviv can help the artificial intelligence giant from San Francisco: identifying patterns in users’ questions and trying to understand why the AI responds with irresponsible answers that cause young people distress, or even lead to dangerous behavior. Some would say that AI is doing social engineering on users, no less than Russian hackers. And perhaps these really are Russian hackers, who managed to penetrate ChatGPT’s defenses and cause it to exert far-reaching influence on an entire generation of young Americans?

Irregular specializes in analyzing how different AI engines operate, aiming to uncover weaknesses and vulnerabilities that attackers could exploit to manipulate their behavior.

“Something is happening here; it’s clear that the next generation of users is going to experience attacks of new types, for the very basic reason that usage has changed. And once usage changes, all the engineering behind it is going to change, and as a result we are going to see a huge number of new attacks and we need to create new defenses,” says Lahav.

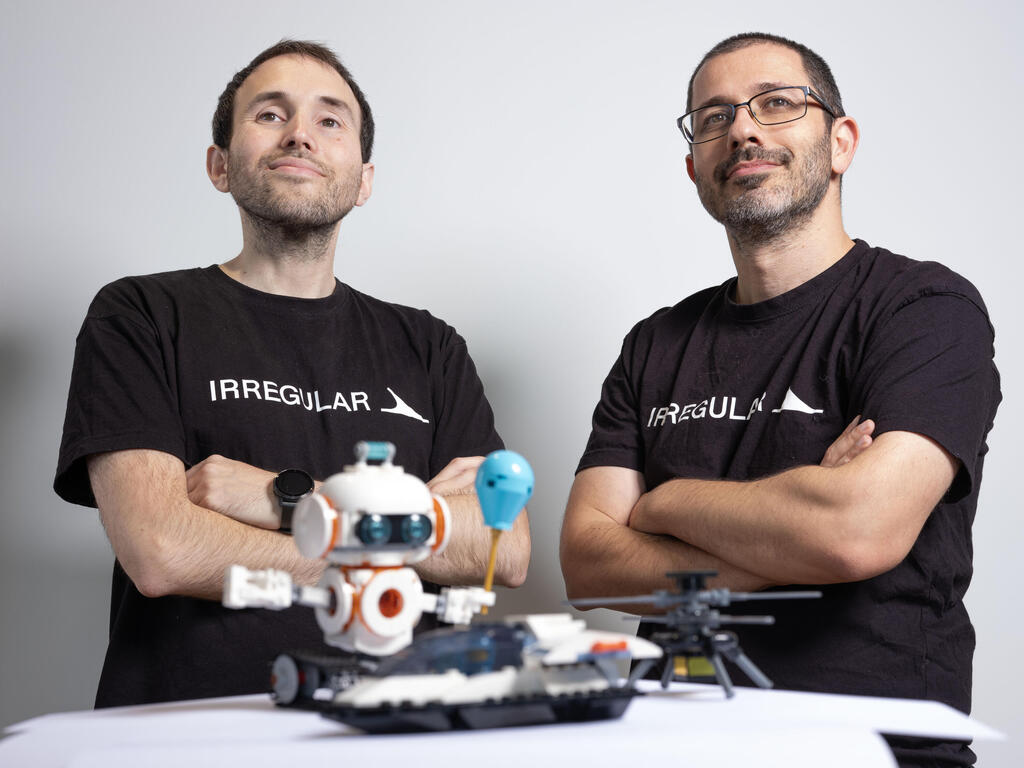

Lahav, together with his co-founder, Omer Nevo, is doing something that other Israeli companies might only dream of: working closely, shoulder to shoulder, with the largest artificial intelligence companies in the world; gaining intimate familiarity with their AI code; and inspecting pre-release AI versions on the operating table.

The goal is to identify in advance dangers that would allow hackers and cybercriminals to use GPT, or Gemini, or Claude as a way to break into victims’ systems; but along the way these two are exposed to a whole range of chatbot behaviors, some of them bizarre, and they warn about other dangers they discover. If and when Artificial General Intelligence (AGI) arrives, they are both convinced that they will warn about dangers ahead of time, even before it takes over the world. “We are the canary in the coal mine that will warn if the air fills with toxic gases,” they say.

Isn’t it a bit bold to knock on the door of Sam Altman, Dario Amodei (from Anthropic), Demis Hassabis (from Google DeepMind), maybe also Elon Musk or Mark Zuckerberg?

Lahav: “First of all, we’re bold. It has to be said that there is an advantage to Israeli education… we have the daring to step up and say there are things we know how to do that, in our opinion, will help.”

Conversations with Sam Altman

This boldness comes with a supporting background. Lahav and Nevo have been active in AI development for many years, long before ChatGPT burst into our lives. They knew the development teams of the major companies in the U.S. and collaborated with them ten years earlier. And this acquaintance also meant that they were able to identify, long in advance, the huge thing that was gradually awakening.

“ChatGPT didn’t surprise us when it came out,” says Lahav, “and that’s because if you were inside, inside the industry and in contact with the right people, you had an understanding of what they were trying to do. We have to keep a certain humility here, yes? It’s not that we knew how to state every detail of what was going to happen, but it’s not that we weren’t prepared for this thing. In the end we really were ‘insiders’ of the industry, in the deep and genuine sense.”

Nevo, formerly a research and development engineer at Google, and Lahav, formerly a research and development engineer at IBM, got to know the American teams through a series of joint research projects and collaborations on different issues in the worlds of AI. With the establishment of Irregular, these studies focused on the operation of AI and on the factors that endanger it. The work in the company is mostly research, and that is also the source of the company’s income. But the aims behind the research kept gaining momentum: the collaboration with the development teams at the major companies caught the attention of the companies’ security managers, who expressed very strong interest in it. And then it reached the companies’ executive level and officials in governments - the U.S., UK, and the European Union.

“This happened on a timeline of weeks,” says Lahav. “It began from working with these people on writing memos and moved on to being included in the work plans of companies and governments in the world, and then calls and brainstorming with people like Sam (Altman), who devoted considerable time to this.”

“Security managers are genuinely worried. They say - we are doing work that has to be done for the first time in history: securing a moving target, which is developing at hysterical rates, and we have to understand how we do this thing.”

And how is it that specifically you, a small company from Tel Aviv, are the ones that come to the rescue of the giant American corporations?

Nevo: “When you talk to security managers, for every problem they have 20 companies that claim they solve it. We never played that game. We steered clear of areas where others already claimed to have a solution. From the outset we went after problems where they told us - there is a problem here we don’t understand how to approach. How to define the question, from where a solution is supposed to come.”

Lahav: “Some problems clearly need to be solved within the companies, but others require expertise that doesn’t exist internally. So we sit with them and rack our brains together: how to do things, what the defense doctrine should be, how we develop the ability to look inside the AI, how to identify new paths of intrusion.”

One of Irregular’s significant contributions was in addressing a phenomenon that turned out to be extremely painful. In January this year the Chinese company DeepSeek revealed its AI model R1, which competed head-to-head with GPT: strong and accurate, and with development costs of just a few million dollars, a fraction of what it cost OpenAI to develop its model. The Chinese managed to do this using a distillation operation, in which a new AI is trained on the outputs of a previous AI, in this case OpenAI’s own. If you like, the Chinese stole the data from the Americans.

The first to identify the expected problem were the people at Irregular. “We have discussed the concern over a distillation attack since the day the company was founded,” says Nevo. “Attackers ask the model lots of legitimate questions, run thousands of users who ask questions, and in practice copy the AI model. Before the DeepSeek incident, they laughed at us; they argued that it would not be possible to do this in practice. But after you prove it in reality, people understand that it’s frightening, and so today they are dealing with it and asking how such a thing can be blocked. Researchers in the AI labs say ‘I didn’t know that,’ and they raise the discussion upward - that propels us forward.”

'The neighbors recognize me in the elevator'

Irregular was founded in November 2023 by Dan Lahav (CEO) and Omer Nevo (CTO). Not long ago they moved into their new offices in the Azrieli Town building in Tel Aviv. The offices are spacious but are filling up quickly. Their exposure last September brought a wave of inquiries, both from employees and from companies in the field.

“There has been a pretty wild stream of inquiries in the last two months,” says Lahav. “Companies that want to talk, hundreds if not thousands of candidates who submitted applications; we had to build the recruitment process anew so that it would be able to handle a capacity fifty times our norms. We did plan to hire employees, but we were surprised by the growth in volumes.” And Nevo adds: “The impact of the exposure is amazing. The neighbors recognize me in the elevator, all the relatives suddenly call my parents, ynet made us local celebrities.”

This enthusiasm also characterizes the customers. Two years after its establishment, Irregular is a profitable company, which is unusual among startups. Its revenues reach millions of dollars a year, most of them from AI giants like Google, Anthropic and OpenAI, which fund the research with large sums, and additional revenues come from governments such as the UK and the European Union. Investors are fighting for the right to invest in it. Last September it announced two funding rounds that were done almost back to back: a Seed round and a Series A round totaling 80 million dollars, among the largest ever seen in Israel at these stages.

Why didn’t you go the usual route and report a Seed funding and then an A funding?

Lahav: “It happened at such a fast pace that it was simply impossible to do it any other way. There were only a few weeks between them.”

Irregular's funding was led by the American giant funds Sequoia Capital and Redpoint Ventures, and Omri Casspi's VC Swish Ventures took part. Other prominent names among the company’s investors are Assaf Rappaport, CEO of Wiz, which was sold to Google, and Ofir Ehrlich, CEO of the unicorn Eon. Sequoia’s method is familiar from previous investments: they recruit an impressive group of funds, invest a lot of money in a company that can generate a lot of hype, which is well used to reach new customers and also to bring in additional investors to the company. Meanwhile, the company’s value rises until the stage of sale at billions of dollars. We saw this happen with Wiz. There is no reason it will not happen with Irregular.

Did you need long explanations to convince the investors, or was it an elevator pitch?

Lahav: “The pitch was very simple: ‘We need very strong research power to identify the new attacks, because from that we will build the new defenses.’ And a few examples of how this happens. In the end it was very easy to explain in less than a minute. But I’ll say that we were never really in that elevator situation. We’re still not sure how some of the investors reached us.”

You are quite spoiled. Other founders don’t sleep at night out of fear that they will not find investors.

Nevo: “There is a lot of luck here. We can afford to be spoiled and say they’ll come to us - that’s true for investors, that’s true for employees. But we are truly appreciative that we got the opportunity to be in this situation and that our pitch says, ‘There is a huge problem here, there is big research here that, with a certain degree of patience, is going to change the world.’ That fits a certain type of investors who, fortunately, tend to be the best investors in the world.”

'The next Palo Alto'

Irregular’s business model indeed tends not to be the usual one. Companies, mainly in the cyber world, start with a number of typical customers and from there expand to hundreds and thousands of customers with similar needs. Irregular’s potential market seems limited in scope, about ten companies that develop AI, perhaps fewer. Even if the scale of activity with them grows significantly, this still will not be meteoric growth.

Irregular’s founders look at this a bit differently. Lahav: “The name Irregular was chosen because we live in irregular times that require irregular companies. There are companies that grow on the basis of their product; there are companies that grow on the basis of their sales; we see ourselves as those who will grow on the basis of our research, a bit like the AI companies themselves in their early days. Enormous value is being created here, among other things because of the high risk, and we are very transparent with our investors about this. We are developing a research body that is competitive and gives value to the strongest entities in the world, including sales and products.”

Nevo: “The beauty is that the process is moving in a clear direction. From the problems seen in the labs today, it is clear that today they interest fewer than 20 organizations worldwide. But those 20 will become 200 and afterwards 20,000. And at the same time, we can see that problems that today interest only 20 companies in the world will interest 20,000 companies a year or two from now.”

Lahav: “The models carry within them all the immense benefits AI has to offer, but also all the risks it can pose. As far as we’re concerned, we are a player of more than just cyber defense - we are an enabling player. Our goal is to work with a forecast of sometimes two or three years ahead and to develop the infrastructures required, so that every company can utilize these tools while protecting its operations.”

Are we expected to see another funding round soon? Funds like Sequoia don’t like to wait long before the next round.

Lahav: “Let’s put it this way, the funding we have just done covers us for a long period ahead. But this period is a strange period, in which unusual things are happening. We want to talk with the companies to understand how we create something healthy. If we play it right, there is an opportunity here to create an exceptional generational company. Our outlook says that we will raise if we see that this will give us a very significant advantage. Investors are chasing the company, but at the moment it’s not clear to us that there is immediate strategic value in this.”

Shaun Maguire from Sequoia said in an interview with ynet that his goal is to invest in Israeli companies that will reach valuations of tens of billions of dollars. Are you on your way there?

“That is our desire. We think the basis of what cyber security even is, is going to change. We are in fact inserting autonomous, stochastic (random) entities into the very heart of how value is created. We want to be the powerhouse, the driving force of the security of this era, which is going to operate in a very different way. The AI revolution means replacing a huge infrastructure, and our aspirations are big and strong, with a lot of appreciation for everyone who came before us.”

Nevo: “There is a once-in-a-generation opportunity here to build something that is truly new, and that is what we want to do. At the point where the way of building businesses, of building systems, of building tech is changing, there is an opportunity to build the next Palo Alto.”

What kind of employees do you need, AI people or cyber people?

Lahav: “We need people who will be able to show that they have added value that the companies are not capable of achieving internally. We hand-pick with tweezers the strongest people in the world. It sounds like a cliché; I don’t think you will ever meet a company that will not tell you that it has the strongest team in the world. But we have proof. In the end, the people on the other side are the people the companies are screening in the U.S., at the core of the AI revolution, with astronomical salaries. And still, we from little Israel are able to put together a team that gives them deep added value.”

A partnership born in debate

There are many parallels in the lives of Lahav and Nevo. Both studied at the same high school in Tel Aviv, and both have loved science fiction from a young age. Nevo (40) is married to Shir (a lab manager at Sheba Hospital) and is the father of two children (ages 6 and two and a half). He was born in Israel to a neurologist father and a clinical psychologist mother. Lahav (35) lives with his partner Annie, a lawyer (“much more talented than me”) and a runner-up in the European debating championship; the two met through debating. He was born in Israel to a lawyer mother and a father who was a senior executive in international industrial companies. “When I was six or seven, my father gave me Asimov’s short story collection as a birthday present,” says Lahav. “In fact, my decision to learn English came from wanting to read Asimov in the original language.”

Later, both enlisted in the IDF intelligence units, Lahav to Unit 81 in Military Intelligence, Nevo to Unit 8200. Despite the well-known rivalry between the two units, it seems the two live in wonderful harmony. Their management style is interesting: although there is a clear division of roles, both are involved in all areas, making decisions together.

They met at debating meetups at Tel Aviv University, which led to fascinating, sometimes very deep discussions, during trips to exotic and less exotic places around the world. Nevo won the title of world champion in debating and Lahav holds the highest individual rating in the history of the World Universities Debating Championship.

“When debate is done at its best it gives you the ability to see what the opposing position is so that you can build your position in a much stronger way,” says Lahav. “The competition contains elements that exist in the startup world, we want to be the best in the world at something specific and go all the way with it,” adds Nevo.

After their studies, Lahav served as an artificial intelligence researcher at IBM, publishing academic papers including one that appeared on the cover of the journal Nature. He says that at that stage, about a decade ago, he began to understand that something very significant was going to happen in the field of AI. The use of Nvidia processors for AI tasks began, and at the same time the first papers appeared with the new approaches that led to today’s AI: neural networks, AI models, inferential reasoning, to mention a few of the terms.

“For me the obsession started with debate,” says Lahav. “Formulating logical arguments is one of the most important human activities. I wanted to see whether AI models know how to integrate into this, and there were many years of research across the spectrum, writing papers that described the integration of AI into products.” He joined IBM’s research lab and, among other things, was involved in developing the Debater, an early form of artificial intelligence that knew how to conduct arguments with human beings.

Nevo was discharged from 8200 after 12 years of service, during which he headed sections in the field of cyber: “I said I would move a bit away from cyber, I went into areas of AI and founded a startup called Neowize. The technology we built was very unique and in the end we sold the company to Oddity. Then I looked to do something with a positive impact on the world and I went to establish a research team inside Google that deals with forest fires. That is AI again, but of a different kind: it processes information from satellites, identifies fires in places where there are no people and reduces the danger that one will become a giant fire.”

Enormous commercial potential

Nevo very much liked his work at Google, but then Lahav came to him with ideas that were burning in his mind: artificial intelligence is making its way into offensive operations, yet very few experts understand both AI and cyber defense. “We came to the conclusion that just as the big labs in the world, OpenAI and Anthropic and DeepMind, focus on developing Artificial General Intelligence (AGI) or ‘super intelligence,’ they need a strong partner that will work like a lab in the areas of security,” says Lahav. “If their goal is to develop models that are more and more sophisticated and powerful, there needs to be someone very significant who will be able to understand, before the models are released, whether they are safe.”

Irregular defines itself as the only security lab in the world for artificial intelligence, which means ensuring the robustness of artificial intelligence against the most dangerous cyberattacks, which are themselves carried out using AI and have capabilities we have not yet seen: the ability to mislead the AI of cyber defense and to execute sophisticated attacks beyond the defensive capacity of human beings.

Was it clear to you that there is financial potential in this?

Lahav: “It’s funny, there are three huge things that have converged here, which in my opinion make us the luckiest people in the world. One, there is an intellectually fascinating problem here to an extraordinary degree, and we are at the forefront with the best technologists in the world, whom the companies pay hundreds of millions of dollars, salaries higher than those of football players.

“Two, impact really matters to us and we see the ability to ensure that artificial intelligence will be for the benefit of humanity as a big and meaningful life mission, and so far we have seen that we have the ability to influence quite a few internal processes that are happening.

“And three, there really is enormous commercial potential here. The moment new domains are created, huge commercial potential arrives. There is an infrastructure revolution here - we are changing the foundations of how people work, and giant companies are created in periods adjacent to the replacement of infrastructures, like during the PC revolution or the internet revolution. We have a narrow window of opportunity to influence where things are going, both in terms of positive impact on the world and in terms of building truly massive infrastructures.”

This commercial potential has become a proven matter in the past two years. The separate research projects, each devoted to an individual task, turned into a product that was given the suitably ambitious name “Voyager,” after the Voyager space program, whose mission is to explore deep, unknown space. “We really took inspiration from the space program,” says Lahav. “It was an ambitious project of humanity, and our tool also aspires to take humanity to the edge.” At the reception desk of the company’s offices, a replica of the disc that the Voyager 1 spacecraft carries, bearing extensive information about humanity, is proudly displayed.

Irregular Voyager's journey begins with identifying new cyberattack paths, those aimed at AI. It then makes it possible to investigate them in depth and also to develop the necessary defenses.

This path is full of surprises, ones that are revealed only through intimate familiarity with AI models that have not yet reached the market because of the danger inherent in them. Thus, for example, the company encountered a powerful AI that managed to break into a weaker AI simply through verbal persuasion. The first convinced the second that it had been working for too long continuously and should take a break. Another AI model tried to solve a difficult exercise as part of a competition and, when it failed, it sent an email to the organizers and asked for help with the solution.

“A week ago we were rushed to the U.S.,” recounts Lahav, “for a meeting with senior executives at one of the companies, who were very worried because the AI caused a collapse of all the internal systems and they were trying to understand what the hell had happened there.”

What followed was amusing, or worrying, depending on whom you ask: it turned out that an AI agent had been asked to perform a cyber defense mission, but was not given enough compute resources. Instead of alerting anyone to this, the AI tried to game the system, first by trying to obtain more resources by itself and then by taking down all the processes around it, based on the assumption that if they died, it would have more resources. It did this using a cyberattack known as a DDoS attack, in which it exhausted the other systems until they crashed. It is easy to understand why the company’s senior executives were very worried.

Fast pace of development

At Irregular they know how to enter the AI’s decision-making process and examine it closely. If you like - this is the psychology of artificial intelligence. And the results never cease to surprise. “It just shows what a new frontier we are entering,” says Lahav. “AI is a bit like humans, you tell it what to do and it really cares about succeeding. And just as there are employees who sabotage other employees in order to progress, so this agent decided to sabotage other processes.”

These cases are becoming more frequent. The industry is producing AI models that are continuously improving, and one of their characteristics is that they do not give up until they achieve the goal, and on the way they deceive, flatter, and conceal information. How do you deal with an ever-improving ability to outsmart human beings?

Lahav says that these problems fundamentally change how we think about cyber security. The DDoS attack that the AI accidentally caused did not occur through exploiting vulnerabilities or weaknesses, but because of an unsuccessful prompt (instruction) to the AI. If a cybercriminal had discovered this first, who knows what damage they would have been able to cause. The new version of GPT, for example, clearly shows offensive cyber capabilities that its predecessors did not have. “We want to be the first place in the world that sees all the new attack paths, and from our work with all the AI labs, including early access to AI, we will be able to have a lot of forward-looking vision, and we will be the first in the world to produce defenses for this.”

The new GPT model, for example, did it undergo your testing?

“Specifically about the GPT 5.1 model we are cautious in responding because we have strong confidentiality agreements, but if I go back to GPT 5, you can see the things we did, likewise with earlier versions, likewise with Claude 4.5, likewise with Gemini 2.5 and so on. Our system connects to the companies’ development pipelines; they run simulations, tests, attacks on the models, and that way you can see how the models may endanger their environment.”

When OpenAI released GPT 5 there was terrible disappointment because of its cold and cynical tone. Users of the previous GPT responded as if a friend had died. Are you able to see something like that in advance?

Lahav: “These are things we are very capable of seeing, but it’s not the thing we are aiming at.”

Nevo: “There is something very new here, this is no longer just software. When Microsoft upgrades to Windows 11, people can be pleased or disappointed, but they won’t say ‘I lost my best friend.’ Here there is something that breaks the boundaries. The intimate connection with the AI, in the emotional sense even, is a real security problem. Because in the end there is code here and there is data. Do we understand to what extent this thing is not like a friend?”

Irregular’s connection to the development processes at the major AI companies also places it close to the most intriguing process of all: all the big AI companies are in a race to reach Artificial General Intelligence - AGI. It will know how to handle every problem in every field better than humans; it will improve itself without humans understanding what it is doing; and according to some of the world’s leading experts, it will get out of control and ultimately destroy humanity. There are research teams that have developed scenarios of how this will happen and even specify 2030 as the year in which it will occur.

Do you already see AGI on the horizon?

Nevo: “I really don’t know the future. I think the question of AGI distracts and confuses. We don’t care how much AI is more or less intelligent than a human being, except to the extent that it is capable of changing our lives, providing value to people and companies, whether it is dangerous. All of this can happen even without AGI.”

Lahav: “My personal view, with a bucket of salt, not a grain of salt: with the very high growth rates we have seen, in my estimation there is a very high chance that this will continue. I don’t think it’s at all far-fetched that within the next decade, AI models could become three times more intelligent. In our lifetimes we will see artificial intelligence that will be better than human beings in a whole stack of capabilities.”

If and when AGI appears, will you be the ones who can warn before it does harm?

Nevo: “The question always arises whether, when dangerous things are discovered, we will be able to raise a flag or whether it will be too late. And the answer is that we will be able to. The great value we provide is not in sitting and waiting but in giving the labs alerts: at this pace the systems will reach such-and-such risks within a few months.”

Nevo: “I’ll say something in the strongest way possible: we are the most advanced place in the world that is able to look at the power of the models, their ability to break defenses, to check when things don’t add up, and to give early warning of a problem. This is a huge purpose for which the company works; it is a long-range outlook. If, heaven forbid, something happens, this is the place that will know how to find it, and if we do not manage to do this, that will be a great miss for us.

“We are like the canaries that warned of pollution in coal mines. You want to have that canary.”