Around the eighth century BC, Homer, one of ancient Greece’s most celebrated poets, composed his two renowned epics: the Odyssey and the Iliad. Remarkably, even then, nearly 3,000 years ago, Homer alluded to technologies that, by today’s standards, would undoubtedly be considered forms of artificial intelligence. The Odyssey mentions autonomous vessels:

“Our ships may shape their purpose accordingly and take you there.

For the Phaeacians have no pilots;

Their vessels have no rudders as those of other nations have,

But the ships themselves understand what it is that we are thinking about and want,” The Odyssey by Homer (translated by Samuel Butler).

And, in the Iliad, one can find allusions to robotics with artificial intelligence:

“There were golden handmaids also who worked for him,

And were like real young women,

With sense and reason,

Voice also and strength,

And all the learning of the immortals,” The Iliad by Homer (translated by Samuel Butler).

Fast forward to the early 20th century. While the electronic computer was still a few decades away from its inception, the visionary thinker and ideologist A.D. Gordon foresaw in his article Man and Nature that art would one day also rely on technology: "Some predict that in the future, creation will shift from being purely an artistic endeavor to becoming an intellectual pursuit entailing intelligent mechanisms and sophisticated combinations." Today we witness the fulfillment of his words, as AI-driven image-generating and text-generating bots reshape the landscape of art and creative expression.

Decoding the mechanisms behind thought

Although artificial intelligence has been present in human thought as a concept for thousands of years, the scientific breakthroughs that allowed it to materialize largely emerged in the past century. After centuries of abstract philosophical contemplation about the nature of thought, the field took a pragmatic turn when researchers and theorists began to formulate practical tools for the logical and formal definitions necessary for the materialization of a thinking machine.

The year 1943 was pivotal in the development of the fundamental principles of artificial intelligence. During this year, American mathematician Norbert Wiener, Mexican physiologist Arturo Rosenblueth, and American engineer Julian Bigelow borrowed the principles of behaviorism from the field of psychology. Behavioral psychology approaches the study of animal behavior, and particularly human behavior, by examining the relationship between an individual's behavior and the stimuli they receive from their external environment. Seeking a behavioral approach to computational systems, the three applied similar tools to the study of technological systems.

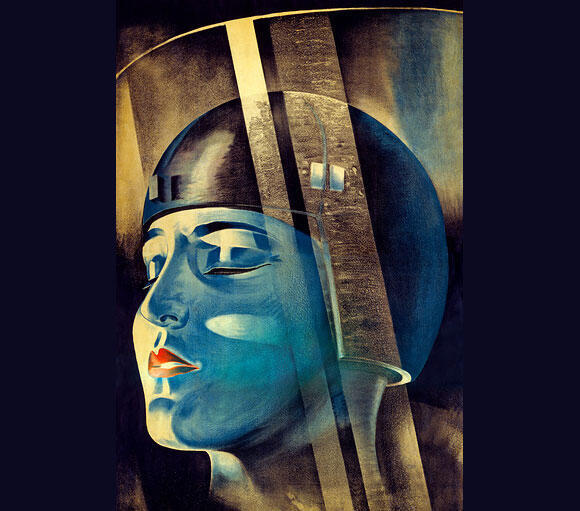

A robotic female figure featured in the movie poster for Metropolis, 1927

(Source: LIBRARY OF CONGRESS / SCIENCE PHOTO LIBRARY)

In their paper "Behavior, Purpose and Teleology" they discussed the behavior of a system and the stimuli it receives from the environment in terms of output and input. They divided potential system behaviors into two categories: those with a defined goal or purpose and those without. A purposeful system is one that aims to achieve a particular end state. This distinction may seem perplexing, since there would appear to be no reason to build a purposeless device, yet there are numerous examples of such technological systems. For instance, clocks efficiently fulfill their function even though they are not designed to reach a specific end time, while a roulette wheel is inherently structured to reach a random final state rather than a predetermined one.

According to the researchers, systems that do behave purposefully need feedback from the environment as input, particularly negative feedback, which restrains the system when it tends to exhibit undesired behavior, much like how a governor stabilizes the speed of steam engines. In the same way, biological systems, such as the brain, can be viewed as feedback and control systems, and down the road, the principle of negative feedback would serve as an important tool in the design of thinking systems.
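The restraining effect of negative feedback can be illustrated with a few lines of Python. This is only a minimal sketch under simplifying assumptions, not a model from the 1943 paper: the function name `simulate_governor`, the `gain` parameter and the speed values are all invented for illustration. At every step the system measures how far its output is from the goal and pushes in the opposite direction.

```python
def simulate_governor(target_speed, initial_speed, gain=0.5, steps=20):
    """Return the speed history of a system corrected by negative feedback.

    All names and numbers here are illustrative assumptions.
    """
    speed = initial_speed
    history = [speed]
    for _ in range(steps):
        error = speed - target_speed   # how far the output is from the goal
        speed = speed - gain * error   # negative feedback: act against the error
        history.append(speed)
    return history


history = simulate_governor(target_speed=100.0, initial_speed=140.0)
print(f"start: {history[0]:.1f}, end: {history[-1]:.4f}")
```

With a gain between 0 and 1 the deviation shrinks at every step, so the speed settles toward the target instead of running away, which is the stabilizing role the governor plays in a steam engine.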

In that same year, American neurophysiologist Warren McCulloch and American logician Walter Pitts published the paper "A Logical Calculus of the Ideas Immanent in Nervous Activity". At that point, a basic understanding of the structure of the nervous system had already formed.

It was known that the nervous system consists of nerve cells, called neurons, which communicate with each other through electrical signals that travel along elongated fibers called axons. These signals eventually converge at synapses, the junctions between the axon of one neuron and the body of another. Each neuron has an internal threshold of electrical voltage, and when this threshold is exceeded, the neuron "fires", transmitting the signal to the neurons connected to it. Any such signal can either raise or lower the electrical voltage in the target cell, and when the voltage surpasses a certain threshold, the neuron also "fires", propagating the signal to the following neurons in the neural network.

Using mathematical tools, McCulloch and Pitts examined how a collection of structural units, arranged in a network and adhering to the basic rules of nerve cells, could produce complex operations. They also raised the possibility that the mathematical structure they defined, which represents a network of human nerve cells, could be used as a Turing machine - an abstract concept introduced in 1936 by mathematician and computer scientist Alan Turing to describe the operation of a computer. Later, as our knowledge of the brain deepened, it became clear that the model of McCulloch and Pitts was overly simplistic, but it was sufficient to form an orderly parallel between human brain functions and computational processes.
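To see how simple threshold units can combine into more complex operations, consider the following Python sketch. It is a simplification rather than the formalism of the original paper: signed weights stand in for signals that raise or lower the voltage, a unit fires when the summed input reaches its threshold, and the names `threshold_unit`, `AND`, `OR`, `NOT` and `XOR` are chosen here for illustration.

```python
def threshold_unit(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def AND(a, b):
    # Both excitatory inputs must be active to reach the threshold of 2.
    return threshold_unit([a, b], [1, 1], 2)


def OR(a, b):
    # A single active input is enough to reach the threshold of 1.
    return threshold_unit([a, b], [1, 1], 1)


def NOT(a):
    # An inhibitory input (weight -1) silences a unit that otherwise fires.
    return threshold_unit([a], [-1], 0)


def XOR(a, b):
    # A small network: fires when exactly one of the two inputs is active.
    return AND(OR(a, b), NOT(AND(a, b)))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", XOR(a, b))
```

No single unit of this kind computes XOR, but a small network of them does, which is the sense in which a network of simple units can produce operations that its individual parts cannot.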

Both of these articles were pioneering in the way they addressed cognitive systems as mechanistic action networks that can be dissected using logical tools. These networks operate on the principle of feedback as a control mechanism, responding to external signals and capable of generating actions. All of this is based on a very basic biological understanding of the nervous system, drawing parallels with the world of electronics, based on switch components that either transmit or do not transmit electrical voltage.

In 1948, Wiener published his seminal book "Cybernetics", which launched a new field in the study of computing systems, treating information as the raw material in systems that engage in communication with each other. In this context, there is no essential difference between an electrical circuit and communication systems involving humans or animals. In both cases it is a matter of transmitting information between entities involved in the operation.

Can machines think?

In an article penned by Alan Turing in 1948, but published only two decades later, Turing grappled with a profound question - Can a machine think? He pointed out that since it is possible to create devices that mimic the action of mechanisms in the human body, it is likely that an artificial thinking mechanism can also be created. For example, a recording microphone fulfills a task similar to that of the ear and a camera copies the action of the eye. Therefore, when it comes to thought, we seek to imitate the operation of the nervous system.

Turing went on to argue that although it is possible to construct electrical circuits that mimic the way the nervous system works, there is no point in striving for an exact replica of the brain using technological tools. It would be like creating "cars that walk with legs instead of wheels," he noted. Like the nervous system, electrical circuits can transmit information and even store it, but the nervous system is much more efficient - it is extremely compact, consumes very little energy, and is resistant to the ravages of time. A baby's brain, he stated, is equivalent to an unstructured mechanism primed to absorb laws and knowledge from the world in order to learn how to navigate it. Drawing on behavioral psychology, he proposed methods for teaching an artificial thinking mechanism much as children learn, through a system of rewards and punishments.

In another paper, from 1950, Turing proposed what is now known as the Turing Test, devised to determine whether a machine has true intelligence. The structure of the test is very straightforward: an individual, unable to see their conversational partner, engages in natural language conversation with the other party. If they are unable to tell whether they are conversing with a human or a machine, then the machine demonstrates the capacity to think.

The next significant advancement was the work of the versatile mathematician John von Neumann, whose ideas enabled, among other things, the development of ENIAC, the first electronic computer that could be programmed by rewiring. In an article from 1951, von Neumann expressed concern about the lack of a formal, logic-based theory of automata - the abstract representation of computational machines - that would enable the implementation of the properties of the nervous system in an artificial network. Furthermore, extending the comparison McCulloch and Pitts had drawn between the human brain and computational machines, he argued that anything that can be formulated precisely and unambiguously in words can be implemented in a neural network.

First steps

One of the early attempts to develop technology inspired by these ideas was the Perceptron. In 1958, American psychologist Frank Rosenblatt introduced a device designed to distinguish between two categories, for example between a picture of a man and that of a woman. The machine consisted of several layers: the first was composed of light receptors designed to imitate the function of the eye’s retina. The receptors were connected to a second layer, which consisted of artificial neurons. Each neuron gathered input from several receptors and decided, according to the signals it received, whether to mark 0 or 1. Ultimately, the collective decisions from all the neurons were weighed together, resulting in a final decision of 0 or 1. This final output was intended to determine which of the two possible categories the perceived visual input belonged to.

The device underwent a process of learning through feedback given to it for its decisions. It is reported that Rosenblatt's machine learned to differentiate between right and left markings and even between pictures of dogs and cats.
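Learning through feedback of this kind can be sketched in Python with the classic perceptron learning rule. This is a toy illustration and not a reconstruction of Rosenblatt's hardware: the four-receptor "retina", the sample patterns and the names `predict` and `train` are all assumptions made for the example. A marking on the left half is labeled 0 and a marking on the right half is labeled 1, and the weights are adjusted only when the machine answers incorrectly.

```python
def predict(weights, bias, pixels):
    """Output 1 if the weighted sum of receptor signals is positive, else 0."""
    total = bias + sum(w * p for w, p in zip(weights, pixels))
    return 1 if total > 0 else 0


def train(samples, epochs=20, learning_rate=1.0):
    """Adjust weights from feedback on each wrong decision (perceptron rule)."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for pixels, label in samples:
            error = label - predict(weights, bias, pixels)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * p
                           for w, p in zip(weights, pixels)]
                bias += learning_rate * error
        if mistakes == 0:  # every sample classified correctly: stop early
            break
    return weights, bias


# Hypothetical toy data: four light receptors, marking on the left (0) or right (1).
samples = [
    ([1, 0, 0, 0], 0), ([1, 1, 0, 0], 0), ([0, 1, 0, 0], 0),
    ([0, 0, 0, 1], 1), ([0, 0, 1, 1], 1), ([0, 0, 1, 0], 1),
]

weights, bias = train(samples)
print(weights, bias)
print([predict(weights, bias, pixels) for pixels, _ in samples])
```

The rule only works when a single weighted sum can separate the two categories, as it can in this toy data, which hints at why a machine of this kind struggles with more complex tasks.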

The publication of the findings caused a great uproar. The New York Times reported that the "artificial computer embryo" would eventually learn to walk, talk, see, write, reproduce itself and become aware of its very existence. However, when the initial uproar subsided, the limitations of the system became apparent. It was only able to differentiate between simple groups, and its range of capabilities was narrow. The machine’s structure was too simple for complex tasks.

In a 1961 film, British neurobiologist Patrick Wall raised an interesting question: Would machines be able to come up with a new idea? After all, when groundbreaking scientists like Isaac Newton, Charles Darwin or Galileo Galilei conceived their great ideas, they had to abandon the laws and basic assumptions previously considered true. Machines perform actions as instructed in advance. They obey the rules dictated to them by humans.

Thus, even as mathematical approaches to analyzing the capabilities of artificial networks developed and the first steps were taken toward implementing the technology, opinions varied widely. Alongside the vision and hope, considerable doubts arose as to whether features such as creativity, innovation and independence could exist in a system that could be called "artificial intelligence".

The birth of artificial intelligence

In 1955, Claude Shannon and John McCarthy encountered a problem when they approached the editing of a collection of articles dedicated to automata. A significant portion of the papers submitted to them were dedicated to the mathematics behind computability and did not attempt to tackle the pressing issues, such as whether machines can play games or think. In response, the two, in collaboration with some of their colleagues, organized a special summer conference at Dartmouth College in New Hampshire, which would bring together in one place the best researchers from all fields associated with intelligent machines, sharing a common objective.

The participants of the Dartmouth conference were a magnificent gallery of researchers from all fields related to artificial intelligence: mathematicians, physicists, psychologists and electrical engineers, a combination of academic researchers alongside representatives of the practical side of the computer industry.

Several of the participants achieved renown either prior to the conference or shortly after it: Shannon worked at Bell Laboratories and became famous for his groundbreaking contribution to information theory; Marvin Minsky received the Turing Award in 1969, which is considered the most prestigious award in computer science; also notable were Warren McCulloch and Julian Bigelow, mathematician John Nash, who received the Nobel Prize in Economics in 1994 for his work on game theory, and psychologist Herbert Simon, winner of the Turing Award (1975) and the 1978 Nobel Prize in Economics.

Despite their varied backgrounds and different approaches to the idea of artificial intelligence, they all shared a similar vision. They believed that intelligence is not limited only to biological beings, and that thought and learning could be formulated by mathematical means, and implemented using a digital computer.

Not all participants adhered to the originally intended two-month duration, and some were content with only one month or even a mere two weeks. Immediate outcomes of the symposium appeared rather limited and did not quite justify the great aspirations of its organizers. Nevertheless, this gathering is frequently regarded as the birthplace of artificial intelligence as a distinct field.

Beyond the fact that this was the first instance in which the term "artificial intelligence" was used to describe the field, the conference also delineated its boundaries and constituent elements. For the first time, leading researchers, each having tackled questions of artificial intelligence individually in their respective field of expertise for years, convened with the shared purpose of advancing the discipline. It was at this gathering that the central questions of the field were formulated and crystallized, and future goals and aspirations were collectively articulated.

In the decades to come, the AI of the 1940s and 1950s would undergo significant evolution and refinement. It transitioned into new forms, such as those of a checkers player and chess master, acquired abilities such as maze-solving, developed conversational skills and briefly grappled with computational limitations, during what would later be termed "the first AI winter". As we know today, AI has managed to emerge from this period of stagnation, breaking new boundaries daily. And yet, even today, some of the most fundamental questions, about the nature of intelligence and the potential of a computer to generate truly novel thoughts, remain unresolved.