On Monday, NVIDIA announced the NVIDIA Nemotron 3 family of open models, data and libraries designed to power transparent, efficient and specialized agentic AI development across industries.

The Nemotron 3 models, available in Nano, Super and Ultra sizes, introduce a breakthrough hybrid latent mixture-of-experts architecture that helps developers build and deploy reliable multi-agent systems at scale.

As organizations shift from single-model chatbots to collaborative multi-agent AI systems, developers face mounting challenges, including communication overhead, context drift and high inference costs. They also require transparency to trust the models that automate complex workflows. Nemotron 3 directly addresses these challenges, delivering the performance and openness needed to build specialized agentic AI.

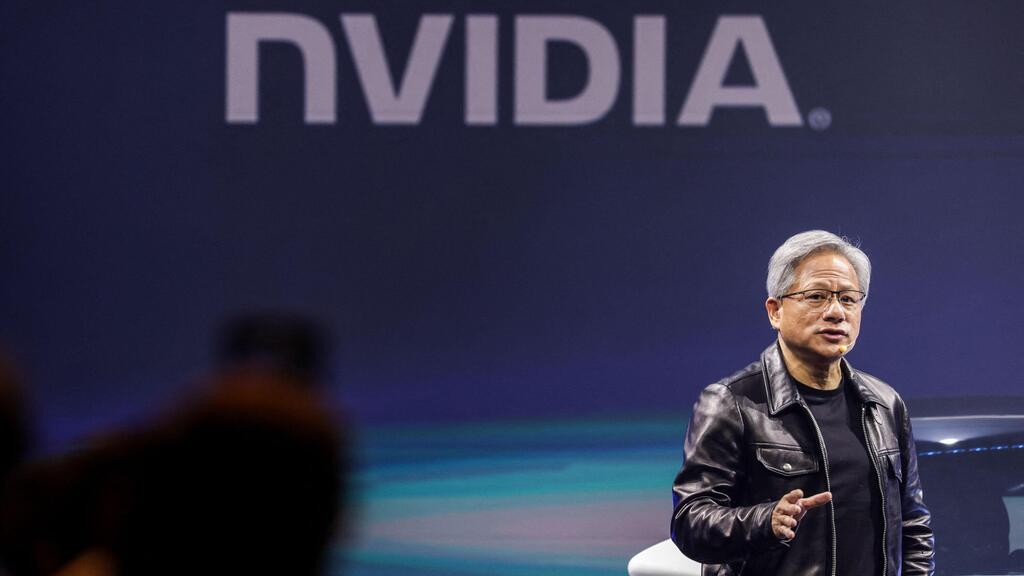

“Open innovation is the foundation of AI progress,” said Jensen Huang, founder and CEO of NVIDIA. “With Nemotron, we are transforming advanced AI into an open platform that gives developers the transparency and efficiency they need to build agentic systems at scale.”

NVIDIA Nemotron supports the company’s broader sovereign AI efforts, with organizations from Europe to South Korea adopting open, transparent and efficient models that allow them to build AI systems aligned with their own data, regulations and values.

Early adopters, including Accenture, Cadence, CrowdStrike, Cursor, Deloitte, EY, Oracle Cloud Infrastructure, Palantir, Perplexity, ServiceNow, Siemens and Zoom, are integrating models from the Nemotron family to power AI workflows across manufacturing, cybersecurity, software development, media, communications and other industries.

“NVIDIA and ServiceNow have been shaping the future of AI for years, and the best is yet to come,” said Bill McDermott, chairman and CEO of ServiceNow. “Today, we are taking a major step forward in empowering leaders across all industries to fast-track their agentic AI strategy. ServiceNow’s intelligent workflow automation combined with NVIDIA’s Nemotron 3 will continue to set the standard for efficiency, speed and accuracy.”

As developers move toward multi-agent AI systems, they increasingly rely on proprietary models for advanced reasoning while using more efficient and customizable open models to reduce costs. Routing tasks between frontier-level models and Nemotron within a single workflow lets agents draw on frontier-level reasoning where a task needs it while keeping token costs down everywhere else.
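A routing step like the one described above can be sketched in a few lines. This is a minimal illustration, not Perplexity's or NVIDIA's router: the model names, the `needs_deep_reasoning` flag (assumed to come from some upstream classifier) and the cost figures are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical identifiers; substitute the real model IDs your providers use.
OPEN_MODEL = "nemotron-3-nano"            # assumed name for the efficient open model
FRONTIER_MODEL = "proprietary-frontier"   # placeholder for a proprietary model


@dataclass
class Task:
    prompt: str
    needs_deep_reasoning: bool = False  # assumed to be set by an upstream classifier
    max_budget_usd: float = 1.0         # hypothetical per-task cost ceiling


def route(task: Task, frontier_cost_usd: float = 0.50) -> str:
    """Use the frontier model only when the task needs deep reasoning and
    the budget covers it; everything else goes to the cheaper open model."""
    if task.needs_deep_reasoning and frontier_cost_usd <= task.max_budget_usd:
        return FRONTIER_MODEL
    return OPEN_MODEL


print(route(Task("Summarize this incident log")))
print(route(Task("Draft a multi-quarter migration plan", needs_deep_reasoning=True)))
```

Defaulting to the open model keeps the common path cheap; a production router would more likely score tasks with a learned classifier than rely on a boolean flag.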

“Perplexity is built on the idea that human curiosity is amplified by accurate AI embedded in exceptional tools such as AI assistants,” said Aravind Srinivas, CEO of Perplexity. “With our agent router, we can direct workloads to the best fine-tuned open models, such as Nemotron 3 Ultra, or leverage leading proprietary models when tasks benefit from their unique capabilities, ensuring our AI assistants operate with exceptional speed, efficiency and scale.”

Open Nemotron 3 models enable startups to build and iterate faster on AI agents and accelerate innovation from prototype to enterprise deployment. Portfolio companies at Mayfield are exploring Nemotron 3 to build AI teammates that support human-AI collaboration.

“NVIDIA’s open model stack and the NVIDIA Inception program give early-stage companies the models, tools and cost-effective infrastructure to experiment, differentiate and scale quickly,” said Navin Chaddha, managing partner at Mayfield. “Nemotron 3 gives founders a running start in building agentic AI applications and AI teammates, while helping them tap into NVIDIA’s massive installed base.”

Nemotron 3 reinvents multi-agent AI with efficiency and accuracy

The Nemotron 3 family of mixture-of-experts models includes three sizes:

Nemotron 3 Nano, a 30-billion-parameter model with 3 billion active parameters, designed for targeted, highly efficient tasks.

Nemotron 3 Super, a high-accuracy reasoning model with approximately 100 billion parameters and 10 billion active parameters, built for multi-agent applications.

Nemotron 3 Ultra, a large-scale reasoning engine with roughly 500 billion parameters and 50 billion active parameters, designed for complex AI applications.
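Across all three sizes, roughly one parameter in ten is active per token (3/30, 10/100, 50/500). The sketch below shows the generic top-k gating idea behind "active parameters": a gate scores every expert, but only the k best-scoring experts actually run. It is a simplified, assumption-labeled illustration of mixture-of-experts routing in general, not Nemotron 3's hybrid latent design, whose internals this announcement does not describe.

```python
import math
from typing import Callable, List, Sequence

Expert = Callable[[List[float]], List[float]]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: List[float], gate_logits: Sequence[float],
                experts: Sequence[Expert], k: int = 2) -> List[float]:
    """Generic top-k mixture-of-experts for one token."""
    # Pick the k experts with the highest gate scores (ties keep list order).
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    # Renormalize the gate weights over the selected experts only.
    weights = softmax([gate_logits[i] for i in top])
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)  # unselected experts are never evaluated
        out = [o + w * yi for o, yi in zip(out, y)]
    return out


def active_fraction(total_params_b: float, active_params_b: float) -> float:
    """Share of parameters used per token, e.g. 3B of 30B -> 0.1."""
    return active_params_b / total_params_b


for name, total, active in [("Nano", 30, 3), ("Super", 100, 10), ("Ultra", 500, 50)]:
    print(name, active_fraction(total, active))
```

Because compute per token scales with the active experts rather than the full parameter count, a model can hold a large total capacity while paying roughly the inference cost of a much smaller one.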

Available today, Nemotron 3 Nano is the most compute-efficient model in the family, optimized for targeted tasks such as software debugging, content summarization, AI assistants and information retrieval at low inference costs. The model uses a unique hybrid mixture-of-experts architecture that delivers gains in efficiency and scalability.

This design achieves up to four times higher token throughput compared with Nemotron 2 Nano and reduces reasoning token generation by up to 60 percent, significantly lowering inference costs. With a one-million-token context window, Nemotron 3 Nano retains more information, improving accuracy and long-horizon reasoning across complex, multistep tasks.
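To see how the two "up to" figures could compound, model generation time as tokens divided by throughput. The sketch below assumes, optimistically, that both maxima apply to the same workload at once; the announcement does not claim that, so treat the result as a best-case bound rather than a measurement. The 10,000-token baseline is a hypothetical example.

```python
def remaining_reasoning_tokens(baseline_tokens: int, reduction: float = 0.60) -> float:
    """Reasoning tokens left after a fractional cut (0.60 means 60 percent fewer)."""
    return baseline_tokens * (1.0 - reduction)


def relative_generation_time(reduction: float = 0.60, throughput_gain: float = 4.0) -> float:
    """Generation time relative to the baseline model, with time = tokens / throughput.

    Assumes both the token reduction and the throughput gain hold simultaneously,
    which is a best case, not a reported result.
    """
    return (1.0 - reduction) / throughput_gain


print(remaining_reasoning_tokens(10_000))  # hypothetical 10,000-token baseline
print(relative_generation_time())
```

Under those assumptions, a 10,000-token reasoning trace shrinks to about 4,000 tokens, and generation time falls to roughly a tenth of the baseline; real workloads will land somewhere between that bound and no change.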

Artificial Analysis, an independent AI benchmarking organization, ranked the model as the most open and efficient in its size category, with leading accuracy.

Nemotron 3 Super excels in applications requiring many collaborating agents to perform complex tasks with low latency. Nemotron 3 Ultra serves as an advanced reasoning engine for AI workflows that demand deep research and strategic planning.

Nemotron 3 Super and Ultra use NVIDIA’s ultra-efficient 4-bit NVFP4 training format on the NVIDIA Blackwell architecture, significantly reducing memory requirements and accelerating training. This efficiency enables larger models to be trained on existing infrastructure without sacrificing accuracy compared with higher-precision formats.
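The memory savings come from block-scaled 4-bit storage. The sketch below shows the idea in simplified form, assuming an NVFP4-like layout: each value is stored as a 4-bit E2M1 float (magnitudes 0, 0.5, 1, 1.5, 2, 3, 4 and 6), and every block of 16 values shares one scale factor. Real NVFP4 stores that scale in FP8 and adds a per-tensor scale; here the scale stays a full-precision Python float for clarity. It illustrates storage only and says nothing about how NVIDIA trains in this format.

```python
from typing import List, Tuple

# Magnitudes representable by a 4-bit E2M1 float; the sign is handled separately.
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
BLOCK_SIZE = 16  # number of values sharing one scale factor


def quantize_block(block: List[float]) -> Tuple[float, List[float]]:
    """Scale the block so its largest magnitude maps to 6.0, then round each
    value to the nearest grid point (ties go to the smaller magnitude here)."""
    amax = max(abs(v) for v in block)
    scale = amax / 6.0 if amax > 0 else 1.0  # simplification: kept in full precision
    codes = []
    for v in block:
        mag = min(E2M1_GRID, key=lambda g: abs(abs(v) / scale - g))
        codes.append(mag if v >= 0 else -mag)
    return scale, codes


def dequantize_block(scale: float, codes: List[float]) -> List[float]:
    return [c * scale for c in codes]


def quantize(values: List[float]) -> List[Tuple[float, List[float]]]:
    """Split a tensor (flattened to a list) into blocks and quantize each one."""
    return [quantize_block(values[i:i + BLOCK_SIZE]) for i in range(0, len(values), BLOCK_SIZE)]


def bits_per_element(element_bits: int = 4, scale_bits: int = 8, block: int = BLOCK_SIZE) -> float:
    """Average storage cost per value, including the amortized block scale."""
    return element_bits + scale_bits / block


print(dequantize_block(*quantize_block([12.0, 5.0])))  # 5.0 is rounded to the grid
print(bits_per_element())
```

With an 8-bit scale per 16 values, the average cost is 4.5 bits per value, compared with 16 bits for BF16, or roughly a 3.5x reduction in weight storage. The price is rounding error, visible when 5.0 comes back as a different value after the round trip.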

With the Nemotron 3 family, developers can choose open models that are right-sized for their workloads, scaling from dozens to hundreds of agents while benefiting from faster, more accurate long-horizon reasoning for complex workflows.

New open tools and data for AI agent customization

NVIDIA also released a collection of training datasets and reinforcement learning libraries available to anyone building specialized AI agents.

Three trillion tokens of new Nemotron pretraining, post-training and reinforcement learning datasets provide rich reasoning, coding and multistep workflow examples to support the creation of highly capable, domain-specific agents. The Nemotron Agentic Safety Dataset supplies real-world telemetry to help teams evaluate and strengthen the safety of complex agent systems.

To accelerate development, NVIDIA released the open-source NeMo Gym and NeMo RL libraries, which provide training environments and post-training foundations for Nemotron models, along with NeMo Evaluator to validate model safety and performance.
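Reinforcement learning environments of this kind typically pose a task, collect the model's answer and return a reward that can be checked automatically. The toy environment below illustrates that pattern with a simple reset/step interface. It is a hypothetical example written for illustration; it is not the NeMo Gym or NeMo RL API, whose actual interfaces are documented in those repositories.

```python
import random
from typing import Tuple


class ToyAdditionEnv:
    """Hypothetical single-step environment with a verifiable reward:
    the agent must answer an addition problem exactly."""

    def __init__(self, seed: int = 0) -> None:
        self._rng = random.Random(seed)  # seeded for reproducible prompts
        self._answer = 0

    def reset(self) -> str:
        """Start an episode and return the prompt shown to the model."""
        a, b = self._rng.randint(0, 99), self._rng.randint(0, 99)
        self._answer = a + b
        return f"What is {a} + {b}? Reply with the number only."

    def step(self, response: str) -> Tuple[float, bool]:
        """Score the model's reply; returns (reward, episode_done)."""
        try:
            reward = 1.0 if int(response.strip()) == self._answer else 0.0
        except ValueError:
            reward = 0.0  # unparseable replies earn nothing
        return reward, True  # single-step episode, so it always ends here


env = ToyAdditionEnv(seed=42)
print(env.reset())
```

Because the reward is computed by a program rather than by human labeling, an RL trainer can run many such episodes cheaply; richer environments swap the arithmetic check for unit tests, tool-call validation or similar verifiers.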

All tools and datasets are available on GitHub and Hugging Face.

Nemotron 3 is supported by llama.cpp, SGLang and vLLM. Prime Intellect and Unsloth are also integrating NeMo Gym’s ready-to-use training environments directly into their workflows, providing teams with faster and easier access to advanced reinforcement learning training.

Getting started with NVIDIA open models

Nemotron 3 Nano is available today on Hugging Face and through inference service providers including Baseten, DeepInfra, Fireworks, FriendliAI, OpenRouter and Together AI.
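Many hosted inference providers expose OpenAI-compatible chat completions endpoints, so calling a hosted model often comes down to one HTTP POST. The sketch below uses only the Python standard library. The base URL and model ID are deliberate placeholders, not real values; check your provider's documentation for the actual endpoint, model identifier and authentication scheme.

```python
import json
import urllib.request

# Placeholders only: replace with your provider's real base URL and model ID.
BASE_URL = "https://example-provider.invalid/v1"
MODEL_ID = "nemotron-3-nano-placeholder"


def build_chat_request(prompt: str, api_key: str, max_tokens: int = 512) -> urllib.request.Request:
    """Build an OpenAI-style chat completions request without sending it."""
    body = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    """Send the request and return the first reply (needs network access and a valid key)."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.loads(resp.read())["choices"][0]["message"]["content"]


req = build_chat_request("Summarize the Nemotron 3 model sizes.", api_key="YOUR_API_KEY")
print(req.full_url, req.get_method())
```

Keeping request construction separate from sending makes it easy to switch providers by changing `BASE_URL` and `MODEL_ID`, and to inspect the payload before any network call is made.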

Nemotron is also offered on enterprise AI and data infrastructure platforms, including Couchbase, DataRobot, H2O.ai, JFrog, Lambda and UiPath. For public cloud customers, Nemotron 3 Nano is available on AWS through Amazon Bedrock in serverless mode and will soon be supported on Google Cloud, CoreWeave, Nebius, Nscale and Yotta.

Nemotron 3 Nano is also available as an NVIDIA NIM microservice, enabling secure and scalable deployment on NVIDIA-accelerated infrastructure with maximum privacy and control.

Nemotron 3 Super and Nemotron 3 Ultra are expected to be available in the first half of 2026.